Enemy AI in Reich

In this post I talk about the first implementation of the enemy AI that I’ve made for Reich, a turn-based tactics game.

After reading the excellent Introduction to Genetic Algorithms book by Melanie Mitchell, I got really interested in genetic algorithms and genetic programming and decided I would use GAs to create the enemy opponents’ AI in Reich.

Genetic algorithms are part of the evolutionary algorithms class and are inspired by the natural selection process. They use methods commonly seen in biology like mutation, crossover and selection to reach a solution for a specific problem. Solutions are randomly generated and are improved over generations by evaluating each solution’s fitness and mutating the solution’s code, crossing two solutions together and selecting the best ones over time.

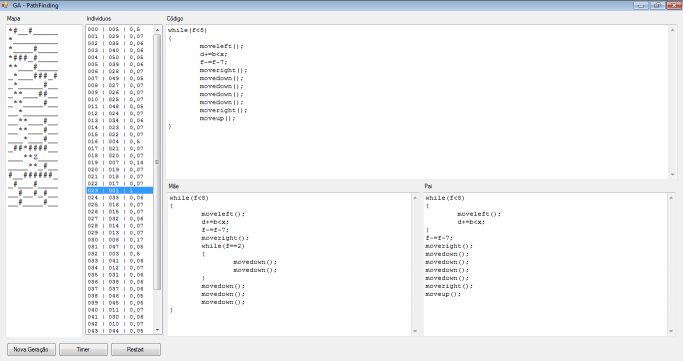

For Reich, I’ve decided that solutions would be small programs written in a c-like language that would instruct how each unit would move. To implement this I started by making an interpreter for that language, an algorithm to create random mini-programs, and another to mix and match programs according to their fitness. In the game, every CPU-controlled unit generates hundreds of these mini-programs and evaluates them over a few generations to decide which one to use for their movement.

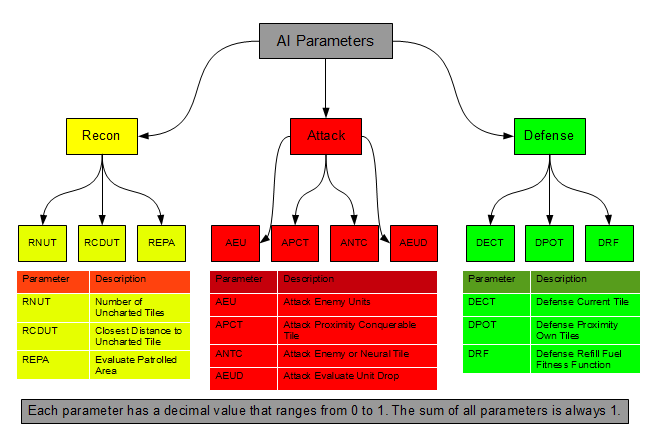

To evaluate how good a movement pattern is I have a series of fitness functions that each AI player uses. They are divided into three types:

- Recon: functions that evaluate how good the performance of a solution is at reaching new places and patrolling areas;

- Attack: functions that evaluate how good the performance of a solution is when it comes to getting closer to enemy units/structures and attacking them;

- Defense: functions that analyze how good a solution is at finding the best tiles to stand on, how close the unit is to structures that are under-attack and so on.

I then have variables that measure how much energy is allocated to each of the three types. At the end of the turn I record the game’s current state (number of player units, number of structures, amount of tiles that are under fog-of-war, etc.) and how effective the current parameter attributes were on advancing the computer’s position.

Later on the CPU will poll this information in order to adjust the parameters for a given game state. The mini-programs with the best fitness results are also recorded and polled on other turns, to get some inter-generational evolution as well.

Each CPU opponent has a set of variables that I’m calling AI parameters:

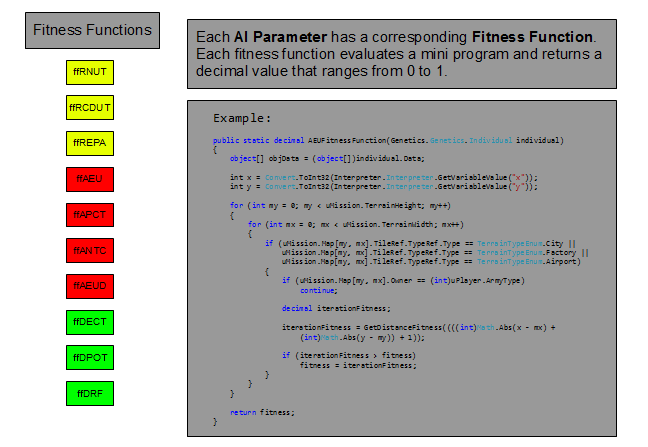

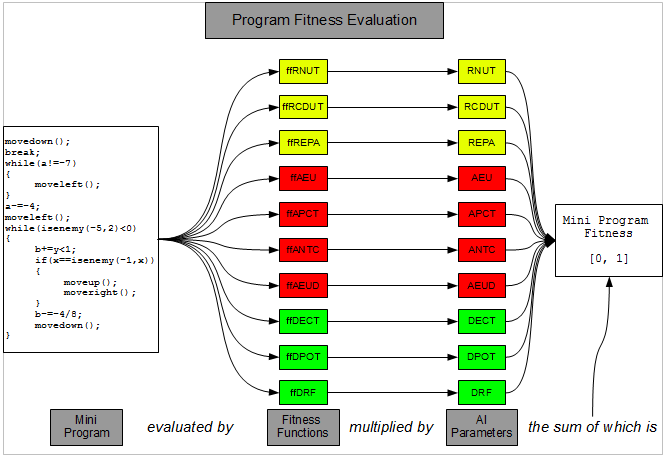

Each AI parameter has a corresponding fitness function, these basically attribute a score that ranges from 0 to 1 to each move that a program creates:

The AI parameters combined with the fitness functions help classify a given program:

This ends up shaping behavior without telling the unit what to do exactly: A bigger percentage attributed to the recon parameters, for instance, will have the unit roaming about the terrain trying to uncover foggy tiles. Having more in the attack will encourage aggressive behaviors - more proximity to enemy tiles, more attacks, etc.

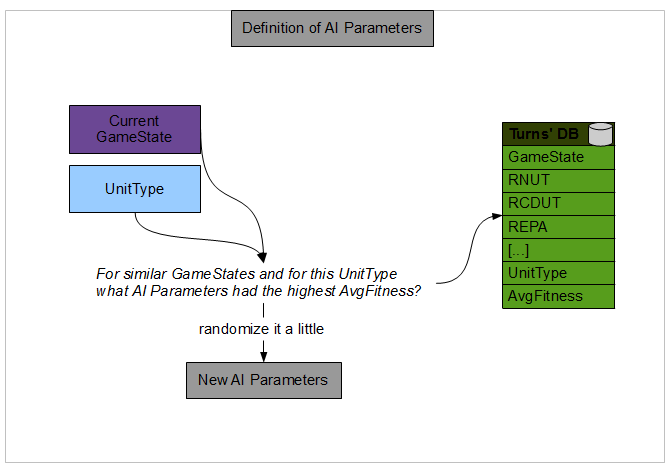

Every time the CPU starts a turn it defines how much of a percentage it is going to attribute to each parameter:

This is done by polling past records of turns and selecting the turns with the higher fitness for a given game state. By game state I mean how the CPU is perceiving the game in a current turn: How many units does the CPU have? How many enemy units do I see? How many tiles are uncovered?

After I get a sample of turns filtered by the state, I use the AI parameters for the ones with the best fitness and randomize them a little. The amount of variance I use when randomizing the parameters is a function of how many records I have on my database. I’m doing this because I believe that I can have a bigger confidence in the results as the number of recordings increase.

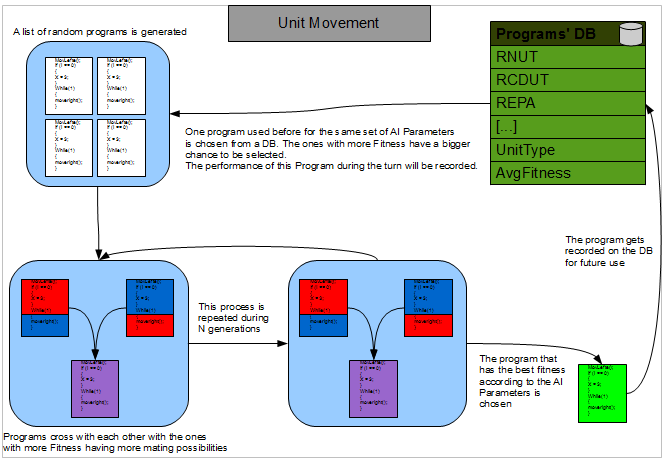

Generating the unit movement is standard GA practice:

Here, too, I have a data store with programs used in past turns. These are used to get some inter-generational evolution to complement the regular intra-generational one provided by a GA cycle. I filter the data store by the game state and randomly pick a program with the ones with the bigger average fitness having a bigger chance to be selected.

After this particular program is evaluated I record its performance back to the store. The best program to come out from the GA cycle is recorded there as well.

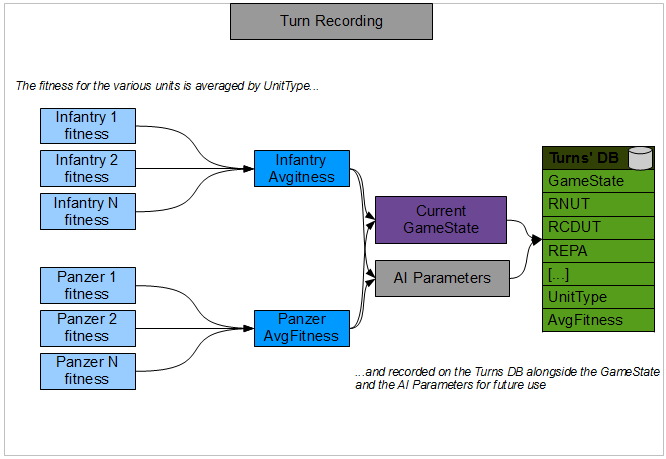

After the turn is done I record the performance of every unit averaging the fitness by its unit type, and the whole process is repeated in the next turn.

Generating unit moves like this created some interesting emerging behavior. However it also ended up being too resource-intensive for this task.

In the end, after watching some of Dave Mark’s presentations on AI and reading his book on the subject - Behavioral Mathematics for Game AI - I ended up using another technique called Utility System that I will discuss in a later post.